supergif

thread about my palette cycler app

Feb 27, 2021

it occurred to me as i worked on my palette cycler quantiser thing that median cut was the wrong thing to use.

or at least, the way i am using it is wrong.

what i was doing was taking an image sequence, an animation, and treating each color a particular pixel becomes as just an extra 3 components in a really big color vector, and quantising that set of “big colors” with median cut.

it results in a frosted glass effect, regions become averaged versions of the pixels in that frame
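a minimal sketch of that "big color" setup, to make the failure concrete. the function names and the tiny two-frame animation are made up for illustration, and the median cut here is a plain textbook version, not the app's actual code.

```python
# sketch: treat each pixel's colour over all frames as one "big colour"
# vector and median-cut those vectors. all names are illustrative.

def big_colors(frames):
    """frames: list of frames, each a list of (r, g, b) pixels of equal length.
    returns one vector of 3 * len(frames) components per pixel."""
    n_pixels = len(frames[0])
    return [
        tuple(c for frame in frames for c in frame[p])
        for p in range(n_pixels)
    ]

def median_cut(vectors, n_buckets):
    """repeatedly split the bucket with the widest axis range at its median."""
    buckets = [list(vectors)]
    while len(buckets) < n_buckets:
        best = None
        for b_i, bucket in enumerate(buckets):
            if len(bucket) < 2:
                continue
            for axis in range(len(bucket[0])):
                vals = [v[axis] for v in bucket]
                spread = max(vals) - min(vals)
                if best is None or spread > best[0]:
                    best = (spread, b_i, axis)
        if best is None or best[0] == 0:
            break  # nothing left that can be split
        _, b_i, axis = best
        bucket = sorted(buckets.pop(b_i), key=lambda v: v[axis])
        mid = len(bucket) // 2
        buckets += [bucket[:mid], bucket[mid:]]
    return buckets

def average(bucket):
    n = len(bucket)
    return tuple(sum(v[i] for v in bucket) / n for i in range(len(bucket[0])))

# two pixels blinking red in opposite phase, over two frames
frames = [
    [(255, 0, 0), (0, 0, 0)],
    [(0, 0, 0), (255, 0, 0)],
]
vecs = big_colors(frames)
merged = average(median_cut(vecs, 1)[0])  # both pixels forced into one bucket
```

when the two blinking pixels land in the same bucket, `merged` is a constant half-bright red in both frames: the blink is gone, which is the frosted glass look.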

SLIC superpixels compared to state-of-the-art superpixel methods - PubMed

the trouble is the way median cut works is it’s a generic algorithm that takes a set of N-dimensional vectors and sorts them into some fixed number of buckets according to how close they are to each other in that n dimensional space. time, instead of being treated as a single dimension, gets treated as a bunch of dimensions, all centred around each other, bundled together by similar color. 3 new dimensions for each frame

what we really want is 6 dimensions total: Lab, phase, amplitude, frequency

so that’s where i got stuck on that project: i need an fft scheme that fits into this problem space.
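for reference, here is one way the three wave numbers could be pulled out of a single pixel's values over time. it is a sketch only: it uses a naive DFT from the standard library rather than a real fft, it works on one channel, and `dominant_wave` is a made-up name.

```python
import cmath
import math

def dominant_wave(samples):
    """return (mean, amplitude, frequency_bin, phase) of the strongest non-DC
    term of a naive DFT. frequency_bin is in cycles per loop."""
    n = len(samples)
    mean = sum(samples) / n
    best = (0.0, 0, 0.0)
    for k in range(1, n // 2 + 1):
        term = sum((s - mean) * cmath.exp(-2j * math.pi * k * t / n)
                   for t, s in enumerate(samples))
        # scale so a pure cosine of amplitude A reports A
        # (the Nyquist bin has no mirrored partner, so it is not doubled)
        scale = 1 if (n % 2 == 0 and k == n // 2) else 2
        amp = scale * abs(term) / n
        if amp > best[0]:
            best = (amp, k, cmath.phase(term))
    amp, k, phase = best
    return mean, amp, k, phase

# one pixel's lightness over a 16 frame loop: 2 cycles, amplitude 3, phase 0.5
samples = [10 + 3 * math.cos(2 * math.pi * 2 * t / 16 + 0.5) for t in range(16)]
mean, amp, k, phase = dominant_wave(samples)
```

the 6 dimensional point for that pixel would then be its average L, a, b plus `phase`, `amp` and `k`, under the assumption that one dominant wave per pixel is enough.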

but what was intriguing is that this is exactly the same place i got stuck in my slitscan project.

the sorting of the palette colors according to wave properties is exactly the same thing i needed to do with scanlines.

and then it occurred to me there’s more overlap to these two problems than I originally thought.

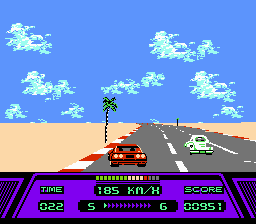

one of the things old videogames would do is modify the palette for each scanline in addition to the scroll register- to e.g. make stripes in rad racer or atmospheric perspective in fzero.

so i thought for my scanline compressor i would come up with something like a palette shift neutral representation + whatever color ops were needed to regain the original colors of the scanline.

taken even further, it occurred to me that if your image that you were going to compress with the scanline compressor was just 256 pixels wide, the image data could just be a unique byte per pixel, and each scanline a full 256 color palette change.
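that extreme case is easy to sketch. the function names below are hypothetical, and the "palette change" is just modelled as a list of colours per scanline.

```python
def encode_scanline_palettes(image):
    """image: list of rows, each row a list of up to 256 colours.
    returns (indexes, palettes): every row of indexes is just 0..width-1,
    and each row's colours move into that row's own palette."""
    width = len(image[0])
    assert width <= 256, "one byte per pixel only addresses 256 palette entries"
    indexes = [list(range(width)) for _ in image]
    palettes = [list(row) for row in image]
    return indexes, palettes

def decode(indexes, palettes):
    """look every index up in the palette that is active on its scanline."""
    return [[pal[i] for i in row] for row, pal in zip(indexes, palettes)]

# tiny 4x3 test image with a different colour at every pixel
image = [[(x * 10, y * 10, 0) for x in range(4)] for y in range(3)]
indexes, palettes = encode_scanline_palettes(image)
```

all of the picture now lives in the palettes and the index data is identical on every line, so any compression has to come from finding palettes that are similar to each other.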

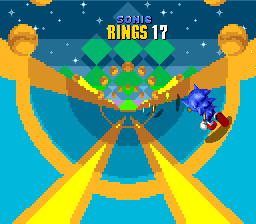

i found out later that this is similar to how gamehut guy achieved the mode7-like effects in mickey mania on the sega genesis.


I came across a pico8 “logo compressor” cart which, I don’t know precisely how it worked, but it seemed to be somewhat scanline oriented too.

Meaning that if you had a 2 color logo on an old game system with 16 color tiles, you could compress your fancy logo as a set of scanlines and palette shifts fairly effectively- and even catch vertical symmetries and repeated lines.

according to gamehut guy again, that’s kinda how these sonic special stages worked

I feel like I didn’t quite justify why I went straight from “time is getting quantized wrong” to “I need to use WAVES” earlier, cos it was kinda hard to explain.

It was just plain human pattern recognition after studying the way my palette cycle quantiser thing worked in a bunch of different experiments.

the common factor in colors going muddy and not animating was animated colors with mismatched phase getting averaged together. This is equivalent to destructive wave interference.
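a small numeric illustration of that interference, using two made-up single-channel colour waves. the wave shape and numbers are assumptions chosen to make the effect obvious.

```python
import math

def wave(phase, n=8, amp=100.0, mean=128.0):
    """one animated colour channel over an n frame loop."""
    return [mean + amp * math.cos(2 * math.pi * t / n + phase) for t in range(n)]

def average(a, b):
    """what a quantiser does when it merges two animated colours."""
    return [(x + y) / 2 for x, y in zip(a, b)]

def swing(samples):
    """how much the merged colour still animates."""
    return max(samples) - min(samples)

in_phase = average(wave(0.0), wave(0.0))
opposed = average(wave(0.0), wave(math.pi))
```

`swing(in_phase)` keeps the full range of 200, while `swing(opposed)` collapses to effectively zero: the merged colour sits at the muddy midpoint and stops animating.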

First off, what I was doing wasn’t “true” palette cycling. it was full palette replacement. In true palette cycling, a section of the palette is rotated/shifted. The colors are all reused. Unlike a full palette replacement, it actually saves on memory, if you can pull it off.

those indexes then represent a spread of different wave phases. It turns out that having a variety of different wave phases represented in the image is essential for the effect to work. My median cut was smushing them.
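for contrast with full palette replacement, here is a minimal sketch of a true cycle. `cycle_palette` and the colour names are placeholders.

```python
def cycle_palette(palette, start, end, step=1):
    """rotate palette[start:end] by step entries; everything else is untouched."""
    section = palette[start:end]
    if not section:
        return list(palette)
    step %= len(section)
    rotated = section[-step:] + section[:-step] if step else section
    return palette[:start] + rotated + palette[end:]

palette = ["sky", "w0", "w1", "w2", "w3", "grass"]
frame1 = cycle_palette(palette, 1, 5)

# after as many steps as the section is long, the palette is back where it began
looped = palette
for _ in range(4):
    looped = cycle_palette(looped, 1, 5)
```

a pixel painted with index 1 shows `w0`, then `w3`, `w2`, `w1` on later frames, while a pixel painted with index 2 shows the same sequence one step behind. the four indexes are four phases of the same wave, and no extra palette memory is used.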

the other thing that I screwed up in this thread is that I probably want 9 dimensions not 6. firstly, to treat luminance and colour separately, but then breaking down each of L,a,b channels to their phase, amplitude and frequency, with some weighting. Merging colours with different phases is worse than merging different amplitudes, or even different colors.

converting them is a lossy operation so I was thinking it would be purely for deciding which color strips are good to average together.
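one way such a weighted 9 dimensional comparison could look, purely for deciding what is safe to merge. the weights are invented numbers, amplitudes and frequencies are assumed to be normalised to roughly 0..1, and where frequency sits in the ranking is a guess since only phase versus amplitude is stated above.

```python
import math

# hypothetical weights: phase mismatch costs the most, per the reasoning above
WEIGHTS = {"phase": 4.0, "amplitude": 1.0, "frequency": 2.0}

def phase_gap(p, q):
    """smallest angle between two phases, in 0..pi (phase wraps around)."""
    d = abs(p - q) % (2 * math.pi)
    return min(d, 2 * math.pi - d)

def strip_distance(a, b, weights=WEIGHTS):
    """a, b: dicts with keys "L", "a", "b", each holding a
    (phase, amplitude, frequency) tuple for that channel."""
    total = 0.0
    for ch in ("L", "a", "b"):
        pa, aa, fa = a[ch]
        pb, ab, fb = b[ch]
        total += weights["phase"] * (phase_gap(pa, pb) / math.pi) ** 2
        total += weights["amplitude"] * (aa - ab) ** 2
        total += weights["frequency"] * (fa - fb) ** 2
    return math.sqrt(total)

base = {"L": (0.0, 0.5, 1.0), "a": (0.0, 0.5, 1.0), "b": (0.0, 0.5, 1.0)}
flipped = dict(base, L=(math.pi, 0.5, 1.0))   # same wave, opposite phase in L
quieter = dict(base, L=(0.0, 0.0, 1.0))       # same phase, no L animation
```

with these weights `strip_distance(base, flipped)` comes out larger than `strip_distance(base, quieter)`, so a merge step would prefer to combine the strips that only differ in amplitude.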

of course, what i really want to do is be flexible in the fft, to have an adjustable number of terms that converges on the original image data, just like in JPEG,

then perhaps have an option to quantise the reproduced wave back to the original indexed color-

which now that i think of it, could be an issue since fft famously doesn’t cope too well with square waves-

which is what pixel art is.
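a rough sketch of both ideas: rebuild a signal from an adjustable number of terms, then snap the result back to the original levels. this uses a naive DFT and keeps the lowest frequencies first, which is only one possible ordering (keeping the largest terms first would be another). all names are made up.

```python
import cmath
import math

def dft(samples):
    n = len(samples)
    return [sum(s * cmath.exp(-2j * math.pi * k * t / n) for t, s in enumerate(samples))
            for k in range(n)]

def reconstruct(spectrum, keep):
    """inverse DFT using only DC plus the `keep` lowest frequencies (and their
    mirrored partners), so raising `keep` converges on the original."""
    n = len(spectrum)
    out = []
    for t in range(n):
        total = 0j
        for k, c in enumerate(spectrum):
            if min(k, n - k) <= keep:
                total += c * cmath.exp(2j * math.pi * k * t / n)
        out.append(total.real / n)
    return out

def snap(samples, levels):
    """quantise each sample back to the nearest allowed level."""
    return [min(levels, key=lambda lv: abs(lv - s)) for s in samples]

square = [0.0] * 8 + [255.0] * 8          # a hard pixel-art style edge
spectrum = dft(square)
rough = reconstruct(spectrum, keep=1)     # one wave term only
exact = reconstruct(spectrum, keep=8)     # every term
```

with one term, `rough` is a smooth wave that overshoots below 0 and above 255, which is the square wave problem in miniature. in this particular case `snap(rough, [0.0, 255.0])` still recovers the original edge, but that is not guaranteed for busier strips or for palettes with more than two levels.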

open to suggestions

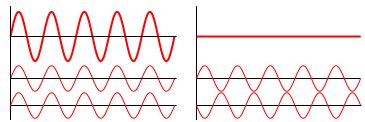

in both the scanline compressor and the animated palette compressor, i have these strings of pixels. rows in the first image, columns in the second.

in both situations i am trying to find similar strings that i can merge, to save on data. in both situations i care about the overall “phase” of the string. in the scanline compressor, i am trying to align phases to compress, restore phases on decompress. in the palette compressor i merged without aligning phase first.

perhaps what i need to do in the palette compressor is the same thing i did for the scanline compressor.

for each line of pixels named lines[i]

1. find the average color

2. store the average as lines[i].a

3. subtract the average from each pixel in lines[i]

4. find the largest amplitude, wavelength and phase

5. store in lines[i] and subtract represented wave from each pixel

6. offset each line to align each phase on the centre of each dominant wave

7. merge similar neighboring lines
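the first six steps above can be sketched for a single channel like this. it is a sketch under assumptions: a naive DFT stands in for the fft, only one dominant wave is extracted, the offset is a cyclic rotation that puts the wave's crest at x = 0, and the dict keys other than `a` are invented.

```python
import cmath
import math

def analyse_line(pixels):
    """average, dominant wave and remainder for one line of single-channel pixels."""
    n = len(pixels)
    avg = sum(pixels) / n                      # find and store the average
    centred = [p - avg for p in pixels]        # subtract it from each pixel
    best_k, best_c = 1, 0j
    for k in range(1, n // 2):                 # strongest DFT bin below Nyquist
        c = sum(v * cmath.exp(-2j * math.pi * k * x / n)
                for x, v in enumerate(centred))
        if abs(c) > abs(best_c):
            best_k, best_c = k, c
    amp, phase = 2 * abs(best_c) / n, cmath.phase(best_c)
    wave = [amp * math.cos(2 * math.pi * best_k * x / n + phase) for x in range(n)]
    residual = [v - w for v, w in zip(centred, wave)]   # subtract the wave
    return {"a": avg, "amplitude": amp, "wavelength": n / best_k,
            "phase": phase, "residual": residual}

def align(pixels, info):
    """rotate the line so the dominant wave's crest lands on x = 0."""
    crest = round(-info["phase"] / (2 * math.pi) * info["wavelength"]) % len(pixels)
    return pixels[crest:] + pixels[:crest]

# two scanlines carrying the same wave, the second slid 3 pixels to the right
line_a = [100 + 50 * math.cos(2 * math.pi * 2 * x / 16) for x in range(16)]
line_b = [line_a[(x - 3) % 16] for x in range(16)]
info_a, info_b = analyse_line(line_a), analyse_line(line_b)
aligned_a, aligned_b = align(line_a, info_a), align(line_b, info_b)
```

after alignment the two lines are pixel-for-pixel the same, so they become candidates for merging, and the phase stored per line is what restores the slide on decompress.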

that step 7 is rather complex, because at that point i need to talk about an index map. so

7a. we split lines[] into metalines[] and imagelines[]. each metaline contains our colour average, phase, amplitude, wavelength, and an index into imagelines. imagelines will contain our remainder “compressed” pixels, and array indexes back to metalines for quick lookup and comparison.

7b. for palettes, maybe median cut in frequency domain. would that work on images? maybe!

7c. alternatively, start looking for pairs of imageline[i], imageline[i+1] where the absolute difference is smallest, (on the overlapped pixels, after offsetting by phase)

7d. when the smallest pair is found, stick them into a bucket. if one of the two is already in a bucket, uhhhh… decide whether to reuse the bucket or not somehow? a homogeneity score?

7e. once bucketed to satisfaction, image data lines in each bucket are merged in some way cleverer than just averaging their overlaps
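a sketch of 7c and one possible answer to 7d. the overlap score follows the description above; the bucketing rule (join neighbours while their score stays under a threshold) is only an assumption standing in for the undecided "homogeneity score", and all names are invented.

```python
def overlap_difference(a, b, offset_a=0, offset_b=0):
    """mean absolute difference over the pixels where two offset lines overlap."""
    start = max(offset_a, offset_b)
    end = min(offset_a + len(a), offset_b + len(b))
    if end <= start:
        return float("inf")  # no overlap at all
    total = sum(abs(a[x - offset_a] - b[x - offset_b]) for x in range(start, end))
    return total / (end - start)

def neighbour_scores(lines, offsets):
    """7c: score every pair (i, i + 1) after offsetting by phase."""
    return [overlap_difference(lines[i], lines[i + 1], offsets[i], offsets[i + 1])
            for i in range(len(lines) - 1)]

def bucket_neighbours(lines, offsets, threshold):
    """7d, one possible rule: visit pairs from most to least similar and merge
    their buckets until the score rises above `threshold`."""
    scores = neighbour_scores(lines, offsets)
    bucket_of = list(range(len(lines)))   # every line starts in its own bucket
    for i in sorted(range(len(scores)), key=scores.__getitem__):
        if scores[i] > threshold:
            break
        old, new = bucket_of[i + 1], bucket_of[i]
        bucket_of = [new if b == old else b for b in bucket_of]
    return bucket_of

# line 0 is line 1 slid one pixel to the right; lines 2 and 3 are near-identical
lines = [[1, 2, 3, 4], [0, 1, 2, 3], [9, 9, 9, 9], [9, 9, 9, 8]]
offsets = [1, 0, 0, 0]
scores = neighbour_scores(lines, offsets)
buckets = bucket_neighbours(lines, offsets, threshold=1.0)
```

here the first two lines share a bucket because they match exactly once offset, the last two share another, and the big jump between them keeps the buckets apart. how to merge within a bucket (7e) is left open.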

sorry for the thinking out loud brain dump- i mainly wanted to get to this:

the potential for this to find and reproduce looping animations that have different “periods” in them that don’t necessarily need to be multiples of each other, as they would in a traditional loop.

since animation is represented as indexes into fft reconstructions of the original animation, it could be forced to be “lossy” at the loop point and allow each pixel to have its natural dominant wavelength.
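a quick bit of arithmetic on why independent periods matter. the periods are made-up frame counts and the helper names are hypothetical.

```python
from functools import reduce
from math import gcd

def loop_length(periods):
    """frames a single shared loop needs so that every period repeats a whole
    number of times (the least common multiple)."""
    return reduce(lambda a, b: a * b // gcd(a, b), periods, 1)

def per_pixel_frame(frame, period):
    """if each pixel loops on its own period, playback just wraps per pixel."""
    return frame % period

periods = [5, 7, 12]
shared = loop_length(periods)      # frames needed by one traditional loop
independent = sum(periods)         # samples stored if each wave loops alone
```

three waves with periods of 5, 7 and 12 frames need a 420 frame shared loop to wrap seamlessly, versus 24 stored samples when each one wraps on its own.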

should I call these foxels or blinksels?

a zillion tweaks and question marks. should i use some other quantisation method? should i sort by something first? should i use a linear approximation instead of an average so loops have a greater chance of wrapping around seamlessly? how dumb is this datastructure? how do i represent “buckets” ?

the median cut presented a problem before because it expects a set of well scattered vectors on a relatively continuous space.

when i gave it a 1 bit animation, it got stuck- because each frame was just a new dimension, it was like i handed it a bundle of vectors all stuck to the corners of an N-dimensional cube. there was no spread of points for it to divide, and on many steps many of the indexes evaluated to equal - a pathological case, that would be common.
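a tiny demonstration of that corner case, with six invented pixels from a four frame 1 bit animation. it only counts ties at the median; it is not the quantiser itself.

```python
def tied_at_median(vectors, axis):
    """how many vectors share the median value on this axis, i.e. how many
    the split on that axis cannot meaningfully order."""
    vals = sorted(v[axis] for v in vectors)
    median = vals[len(vals) // 2]
    return vals.count(median)

# each tuple is one pixel's on/off state over four frames: a cube corner
pixels = [
    (0, 0, 1, 1), (0, 1, 1, 0), (1, 1, 0, 0),
    (1, 0, 0, 1), (0, 0, 1, 1), (1, 1, 0, 0),
]
ties = [tied_at_median(pixels, axis) for axis in range(4)]
components = {c for p in pixels for c in p}
```

every axis only ever holds 0 or 1, so on each axis half of the pixels tie at the median and the sort order inside that half is arbitrary. the axis with the "widest range" is also a tie, since every axis has a range of exactly 1.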

with the palette cycle case, i don’t really want to align the phases and merge them. i want to find similar phases and merge them, and identify spreads of phases with other attributes similar to each other to get as close as possible to a clean smooth rotation in phase in the palette space.

this i have no idea how to do.